Digital Enterprise Journal (DEJ) surveyed and interviewed more than 1,300 organizations to discover best practices for adopting Observability strategies and technology solutions. This research will be published in DEJ’s upcoming study titled “Strategies of Leading Organizations in Adopting Observability” and this Analyst Note summarizes some of the key findings.

The study leverages DEJ’s Maturity Framework to identify a class of top performing organizations (TPOs – the top 20% of research participants based on their performance) and analyze the strategies and technology capabilities they are deploying. Before delving into the capabilities that these leading organizations are leveraging and that have the strongest impact on performance, we wanted to answer a few questions that provide context for the rest of the study.

Why is Observability such a hot topic?

Sixty-four percent of organizations have deployed, or are looking to deploy, Observability capabilities. This ranks Observability as one of the top management concepts in the IT space. There are several reasons why that is the case, but analysis of the survey data shows that it comes down to five key areas:

- Loss of visibility – 58% of organizations reported that they lost visibility into the digital service delivery chain after conducting modernization projects (cloud migration, deploying microservices, adopting a cloud native approach, new software delivery approaches, etc.). For these organizations, adopting Observability is a way to replace monitoring solutions that are no longer effective.

- Business impact – 52% of Observability deployments are driven by the need to achieve specific business outcomes. DEJ’s recent study on the state of IT performance in 2022 showed that the impact on business outcomes is not only the #1 selection criterion, but that it also grew in importance by 32% over the last 12 months.

- Better solution for persistent challenges – Observability is perceived as a more effective approach for addressing the same challenges that organizations have been struggling with for years. The study shows that some of the key challenges that organizations are looking to address by deploying Observability are: 1) an inability to proactively prevent performance issues; 2) too much time spent on troubleshooting performance incidents; 3) a lack of actionable context for monitoring data, etc. These are the same challenges that were at the top of user organizations’ lists (in both IT Operations and DevOps research) over the last 3-4 years.

- As a term, Observability describes an outcome, not a tool, which resonates better with user organizations.

Do people have a clear understanding of what Observability is?

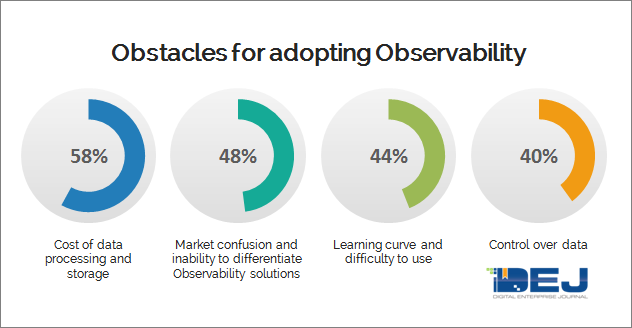

No. Forty-eight percent of organizations reported market confusion and an inability to differentiate Observability solutions as the key obstacle to deployment. Additionally, the top two definitions of Observability selected in the survey were: 1) a MELT (metrics, events, logs and traces) based approach to visibility; and 2) a new approach to monitoring in complex dynamic environments. However, DEJ’s analysis of the practices of TPOs showed:

- There is no significant difference between TPOs and all others when it comes to having all 4 elements of MELT (same goes for 3 “key pillars” – logs, metrics and traces). Therefore, having each of the MELT elements is not a requirement for top performance in adopting Observability.

- Many of the solutions that TPOs are deploying are not defined by strong monitoring capabilities. Effective monitoring of dynamic, complex environments is very important for addressing key challenges, but the study shows that true Observability requires capabilities that go well beyond monitoring.

How did we get here?

The study shows that we are witnessing a perfect storm of technology and business trends that are driving organizations to redefine how they think about using technology, how they manage it and where it fits in their business strategies.

Adopting true Observability addresses each of these market pressures and the study shows that TPOs identified the right mix of capabilities that enables them to turn these dynamics into opportunities and an advantage.

So what really defines Observability?

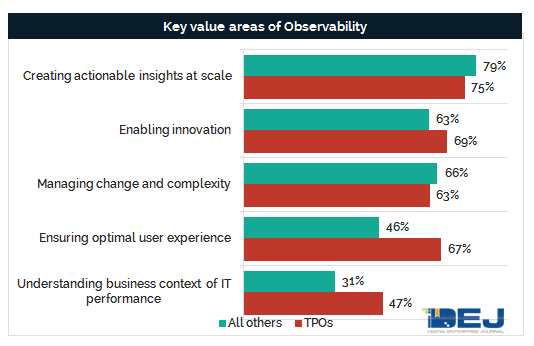

The best way to define any novel technology concept is to simply ask user organizations that are looking to deploy it what it really means to them. The study identified 5 key areas where organizations see the true value of Observability solutions.

There is a lot of value encompassed in these five areas, which also sets a high goal for Observability adoptions. However, the study shows that TPOs are experiencing very good results in each of these domains, which speaks to the value and potential of Observability. If done right.

Observability done right

DEJ’s research examined hundreds of capabilities grouped in four areas – strategy, organization, process and technology – and identified those that are more likely to be deployed by TPOs and are having the strongest impact on performance. DEJ’s analysis below includes a sample of capabilities that are enabling these organizations to outperform their peers when adopting Observability.

Observability is a business play

DEJ’s recent study on The State of IT Performance revealed a 41% increase over the last 2 years in “enabling new and unique customer experiences” as the key driver for investing in IT performance technologies. Additionally, organizations reported delivering exceptional digital experiences as the #1 goal for Observability. Software is increasingly becoming a core part of the business and, in order to truly achieve their Observability goals, organizations need to develop a capability that allows them to understand both software and business cycles in real time.

Additionally, the research shows that the value proposition of Observability is aligned with all key business goals that executives reported for 2022 (see chart below). The real business value of Observability is not only to help businesses achieve these 8 business goals, but also to accelerate the path to these desired business outcomes. The study shows that TPOs are taking a strategic approach to this area by deploying capabilities such as using Observability insights to prioritize business decisions, sharing IT findings with business stakeholders and correlating Observability and business metrics.

Tackling obstacles

The research shows that organizations are facing a number of obstacles when looking to execute on their Observability strategy.

The study shows that TPOs are finding innovative ways to avoid a cost-visibility trade-off. Some of the most effective approaches include:

- Enabling customers to continuously monitor their spend and make intelligent decisions about setting limits that are the most appropriate for their environments

- Enabling real-time analysis of streaming data

- Intelligently directing observability data to areas where it has the most value or the lowest cost of storage

- Reducing the number of streams by unifying data processing
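To make the routing and spend-monitoring ideas in the list above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not something taken from the study: the tier names, the per-GB prices, the severity-based routing rule and the helper names (`choose_tier`, `monthly_cost`, `over_limit`) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical monthly storage prices per GB; these figures are assumptions.
TIER_COST_PER_GB = {"hot": 0.30, "warm": 0.10, "cold": 0.02}


@dataclass
class TelemetryRecord:
    source: str
    severity: str  # e.g. "debug", "info", "warning", "error", "critical"
    size_bytes: int


def choose_tier(record: TelemetryRecord) -> str:
    """Send high-value data to fast storage and everything else to cheaper tiers."""
    if record.severity in {"error", "critical"}:
        return "hot"
    if record.severity in {"warning", "info"}:
        return "warm"
    return "cold"


def monthly_cost(records: Iterable[TelemetryRecord]) -> float:
    """Estimate monthly storage spend for the records under the routing rule."""
    total = 0.0
    for record in records:
        gigabytes = record.size_bytes / 1e9
        total += gigabytes * TIER_COST_PER_GB[choose_tier(record)]
    return total


def over_limit(records: Iterable[TelemetryRecord], limit: float) -> bool:
    """Report whether estimated spend exceeds a user-defined limit."""
    return monthly_cost(records) > limit
```

In practice, the routing rule would likely depend on more than severity (for example, which team queries the data and how often), but the shape stays the same: classify each record, price the destination and compare the running total with a limit that fits the environment.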

Additionally, TPOs are more likely to be leveraging open source to avoid vendor lock-in and maintain control over the data.

Maximizing open source

Fifty-nine percent of leading organizations reported that they are using open source technologies as elementary pieces for building their Observability solutions. However, the research also shows that TPOs are 74% less likely to take a do-it-yourself approach and rely solely on open source tools.

The study shows that the winning formula for the majority of TPOs is deploying commercial solutions that work seamlessly with the open source technologies their teams are already skilled in using, and that maximize the value of those technologies. This allows organizations to address three of the key obstacles to adopting Observability: 1) vendor lock-in; 2) control over the data; and 3) the learning curve for using these solutions.

Data strategies as the key difference maker

One of the key differences between TPOs and all other organizations is their perception of dealing with large and rapidly increasing amounts of data. TPOs are 80% more likely to report that having more data helps with improving visibility into their environments and creating actionable insights. On the other hand, 56% of all other organizations reported increasing amounts of data as one of the key challenges.

As mentioned above, establishing full observability is a business game, but the research also shows that the result of that game is decided by strategies for collecting, managing and analyzing data and delivering it to the right people, at the right time and in the right format. TPOs are also 3.3 times more likely to have defined strategies for Observability data management, which allows them to deal with complexity, manage change and understand not only what is happening in their environments but, more importantly, why it is happening. They are taking the concept of “creating actionable insights” to a new level, going beyond insights and intelligence to getting answers.

The study also reveals that TPOs’ strongest differentiators when it comes to data strategies are in the areas of:

- Correlation and topology

- Eliminating “blind spots”

- Capabilities of time series databases

- Visualization capabilities

- Directing and analyzing data at points where it delivers the most value

Managing Cloud Native

Fifty-seven percent of organizations reported that Observability is a key enabler of adopting a cloud native approach. Additionally, DEJ’s research shows that organizations taking a cloud native approach are 64% more likely to create new technology-driven revenue sources. In order to get full benefits from taking this approach, organizations should be managing each aspect of the cloud native journey and leveraging solutions that are the best fit for these environments. The study shows that TPOs are 60% more likely to use Observability tools that are designed to be used in cloud native environments.

Organization and processes

The study shows that successful Observability deployments and the attributes of TPOs go well beyond the technology capabilities that they are putting in place. Some of the biggest differences between the capabilities of TPOs and those of all other organizations showed up in the following areas:

- Organizational alignment – TPOs are 2.3 times more likely to be centralizing management of their Observability initiatives to ensure they address the key adoption challenges and maximize the value of data for major stakeholders.

- Talent – Recruiting and retaining the right talent for managing dynamic environments is becoming more difficult, and keeping developers and engineers happy is becoming more important. TPOs are enabling processes designed to reduce the frustration of developers and engineers in areas such as spending too much time on troubleshooting, performing manual tasks that can and should be automated, not receiving data in actionable context, etc.

- Collaboration and workflows – Collaboration and communication are sometimes forgotten areas of effective Observability projects, but the study shows that TPOs are putting a lot of emphasis on these areas. The study reveals that TPOs are 2.3 times more likely to deploy collaboration platforms that are based on context-based automation and nearly 3 times more likely to have standard practices for learning from past incidents and “post mortem” analysis.

The study also found that leading organizations are putting processes in place that would allow them to “democratize” benefits of Observability by making them accessible to different teams, job roles and areas of responsibility.

Technology capabilities – 10 magic words

Automation – The study shows that TPOs are more likely to be deploying different types of automation technology capabilities. The research also shows that TPOs are 87% more likely to make automation a core part of their IT and digital transformation strategies.

Reliability – The study shows the importance of reliability from two different perspectives. TPOs are 57% more likely to use the reliability of Observability solutions as a key selection criterion. Additionally, these organizations are more likely to be deploying capabilities that enable them to build innovative digital services and launch them quickly, while ensuring their reliability in production.

Context – Putting data in an actionable context is one of the key attributes of TPOs. Some of the capabilities that these organizations are deploying include an ability to know exactly why changes are happening in their environments or context-based alerting.

Speed – The study shows that TPOs are more likely to be using time to value as a selection criterion for deploying Observability solutions. Additionally, they are deploying capabilities that allow them to significantly reduce the time needed to identify a root cause, capture changes in real time and react quickly. TPOs are also more likely to be adopting GitOps to accelerate the speed of innovation and to be deploying capabilities for reducing query time.

Scale – Some of the key attributes that separate leading organizations from all others include performance capabilities of time series databases, an ability to scale immediately to meet demand and an aptitude to analyze data sets being generated by IoT and edge deployments.

Prevention – The ability to prevent performance issues before users are impacted is one of the key drivers for adopting Observability. To address this challenge, TPOs are deploying capabilities, such as autonomous anomaly detection and the ability to predict the impact of change on performance.
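As a rough illustration of what anomaly detection on a performance metric can look like, the Python sketch below flags values that deviate sharply from a trailing window. This is a deliberately simple stand-in (a rolling z-score), not the technique the study or any particular vendor uses; the function name, window size and threshold are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of values that deviate from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    history = deque(maxlen=window)  # most recent `window` observations
    anomalies = []
    for index, value in enumerate(values):
        # Only score a point once a full window of history is available.
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(index)
        history.append(value)
    return anomalies


# Hypothetical response times in milliseconds, with one spike at index 6.
latencies_ms = [100, 102, 98, 101, 99, 100, 250, 101]
print(detect_anomalies(latencies_ms))  # [6]
```

Note that the spike itself enters the window after it is flagged, which temporarily inflates the standard deviation. A production approach would likely handle that, along with seasonality and trend, far more carefully.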

Real-time – The study shows that capabilities for real-time management are one of the key requirements for successful Observability deployments. As a result, TPOs are more likely to have capabilities for in-stream data analytics, real-time problem detection and alerting and also the ability to detect changes in interdependencies in real-time.

Efficiency – Some of the key capabilities that TPOs are more likely to be deploying include AI and AIOps functionalities, visibility into how resources are being used, elimination of inefficiencies and context-based automation for their incident management practices.

Holistic – Some of the capabilities that TPOs are more likely to be deploying include full-stack observability, the ability to capture every change in an environment’s topology and distributed tracing.

Optimization – In order to find the right balance between cost and visibility, leading organizations are deploying capabilities such as ML-enabled data prioritization, the ability to aggregate different data in the same stream and the ability to reduce log volume by doing away with elements that provide little analytical value.
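The log-volume point can be sketched in a few lines of Python. The example below drops low-severity records and strips fields assumed to carry little analytical value. Which levels and fields count as "low value" is entirely an assumption here (`DROP_LEVELS` and `LOW_VALUE_FIELDS` are hypothetical), and a rule-based filter like this is much simpler than the ML-enabled prioritization the study mentions.

```python
import json

# Hypothetical choices; what counts as low value depends on the environment.
DROP_LEVELS = {"debug", "trace"}
LOW_VALUE_FIELDS = {"thread_id", "debug_payload"}


def reduce_log_volume(lines):
    """Drop low-severity JSON log records and strip low-value fields
    from the records that remain."""
    kept = []
    for line in lines:
        record = json.loads(line)
        if str(record.get("level", "")).lower() in DROP_LEVELS:
            continue  # discard the whole record
        slim = {k: v for k, v in record.items() if k not in LOW_VALUE_FIELDS}
        kept.append(json.dumps(slim, separators=(",", ":")))
    return kept


raw = [
    '{"level": "debug", "msg": "cache probe", "thread_id": 3}',
    '{"level": "error", "msg": "db timeout", "thread_id": 7}',
]
for line in reduce_log_volume(raw):
    print(line)
```

Comparing the total byte size of the input and output gives a quick estimate of the savings, which can then be weighed against the visibility that is given up.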

So what?

DEJ’s recent study revealed that, on average, organizations are losing $7.2 million annually due to a lack of observability. This study dives deeper into the business impact of Observability and shows that this number is even higher and continues to grow. The total business impact of Observability spans several areas, including managing the cost of observability, time spent on tasks that do not contribute to the business, revenue opportunities wasted due to slow application releases, issues with user experience and customer satisfaction, loss of top talent, inability to optimize resource utilization, etc.

Summary

The perfect storm of business and technology pressures completely transformed IT performance visibility. Organizations very quickly had to transition from knowing exactly what needs to be monitored, and for what, to investigating their environments without knowing what questions to ask. Even though this change requires a major shift in how organizations think about managing digital services, the business benefits they can experience if they effectively manage this transformation are well worth the effort.

The study shows a variety of different challenges that organizations are experiencing and goals that they are looking to achieve. In order to join the class of leading organizations, businesses need to understand that there is no silver bullet for achieving full observability. Observability touches every aspect of the business and, therefore, it should be treated as a strategic initiative and not just another technology investment. As a result, organizations should understand that changes to their organizational alignment and internal processes are just as important as deployments of new technology capabilities.

Selecting the right mix of capabilities, starting with strong data management and automation competencies, would enable organizations to achieve all 9 key goals for Observability (see “Key goals” graphic above), which can take their businesses to a completely new level when it comes to market position and potential.